Method

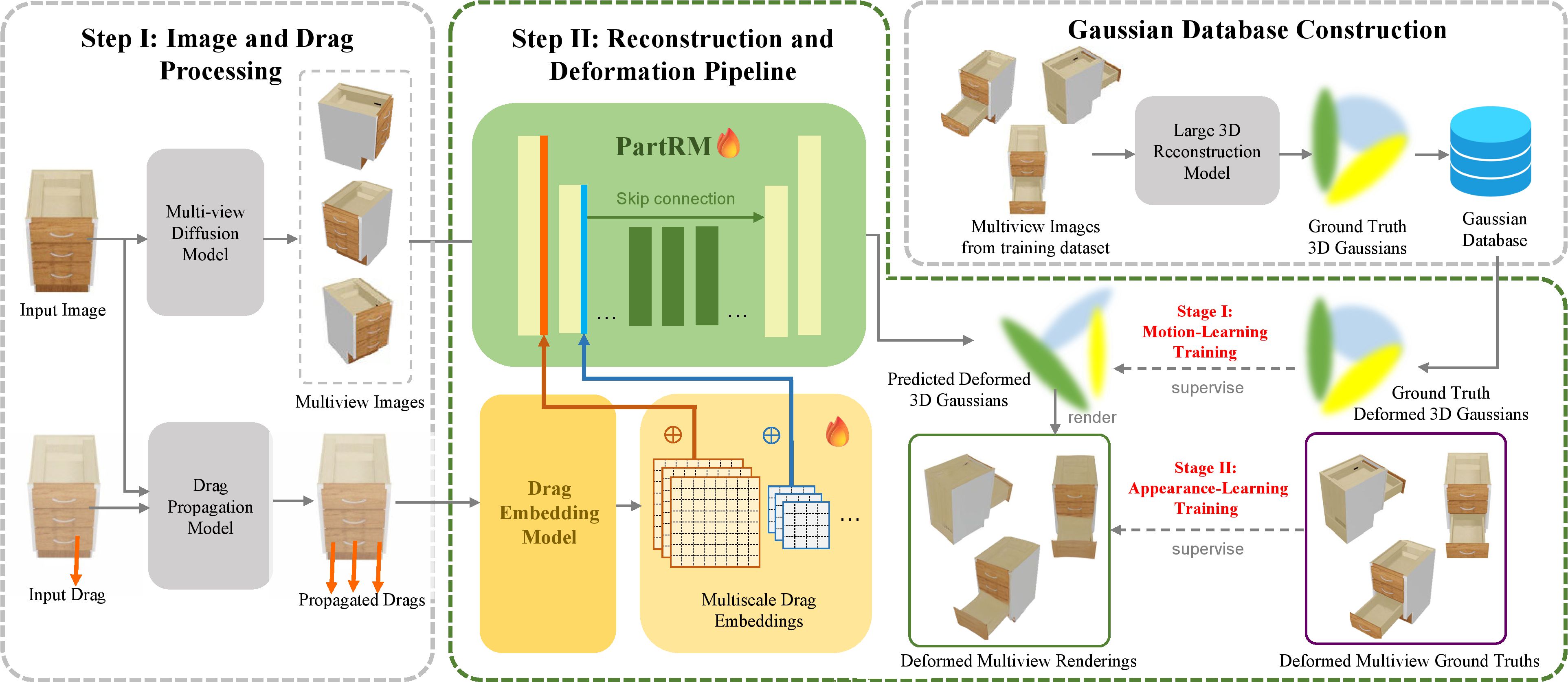

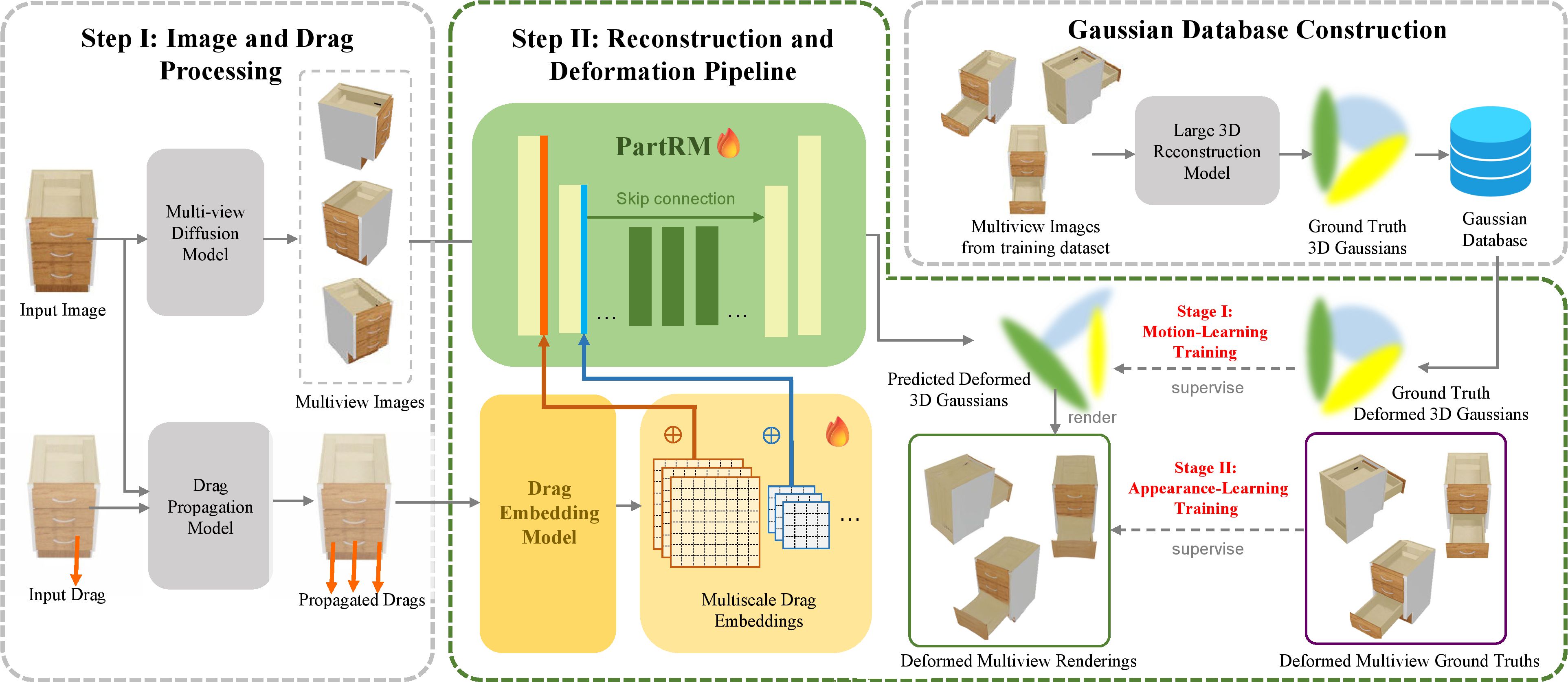

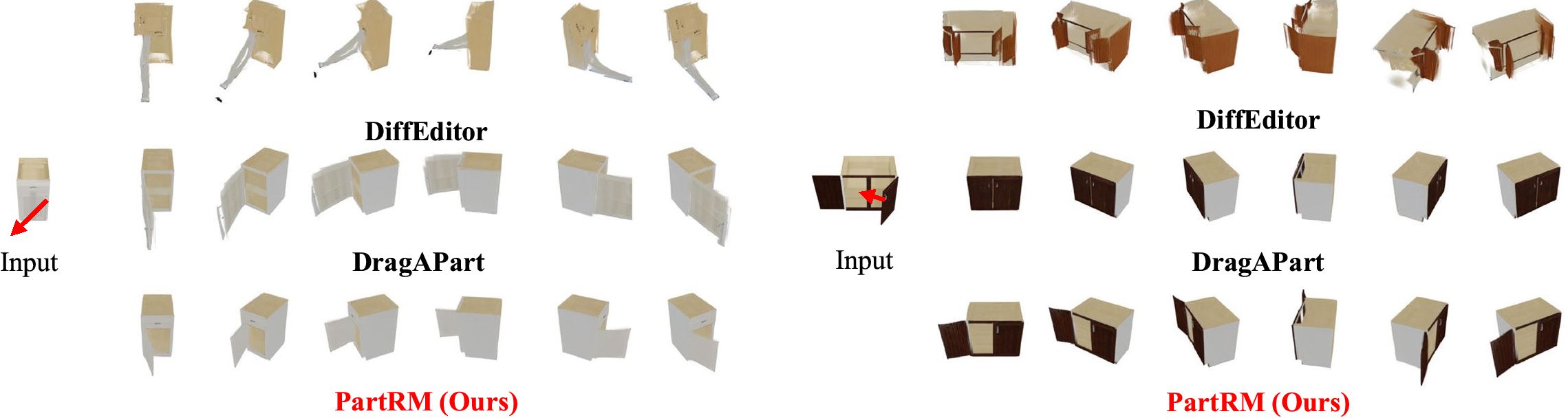

As interest grows in world models that predict future states from current observations and actions, accurately modeling part-level dynamics has become increasingly relevant for various applications. Existing approaches such as Puppet-Master fine-tune large-scale pre-trained video diffusion models, which makes them impractical for real-world use because of the limitations of 2D video representations and their slow processing. To overcome these challenges, we present PartRM, a novel 4D reconstruction framework that simultaneously models appearance, geometry, and part-level motion from multi-view images of a static object. PartRM builds upon large 3D Gaussian reconstruction models, leveraging their extensive knowledge of the appearance and geometry of static objects. To address the scarcity of 4D data, we introduce the PartDrag-4D dataset, which provides multi-view observations of part-level dynamics across over 20,000 states. We enhance the model’s understanding of interaction conditions with a multi-scale drag embedding module that captures dynamics at varying granularities. To prevent catastrophic forgetting during fine-tuning, we use a two-stage training process that focuses first on motion learning and then on appearance learning. Experimental results show that PartRM establishes a new state of the art in part-level motion learning and can be applied to manipulation tasks in robotics. Our code, data, and models are publicly available to facilitate future research.
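The abstract does not spell out how the multi-scale drag embedding works. As a rough illustration only, the hypothetical `drag_embedding` below rasterizes each 2D drag (start point, end point) into displacement maps at several resolutions, so that at coarse resolutions each cell covers a larger image region while fine resolutions localize the drag precisely. The function name, the `scales` values, and the two-channel (dx, dy) layout are assumptions for this sketch, not PartRM's actual module.

```python
import numpy as np


def drag_embedding(drags, scales=(64, 32, 16)):
    """Hypothetical multi-scale drag encoding (illustrative, not PartRM's module).

    drags: array-like of shape (N, 4) holding (x0, y0, x1, y1), with coordinates
    assumed to be normalized to [0, 1] in image space.
    Returns one float32 array of shape (2, s, s) per scale s: channel 0 holds the
    horizontal displacement dx, channel 1 the vertical displacement dy, written at
    the grid cell containing each drag's start point.
    """
    drags = np.asarray(drags, dtype=np.float32).reshape(-1, 4)
    maps = []
    for s in scales:
        m = np.zeros((2, s, s), dtype=np.float32)
        for x0, y0, x1, y1 in drags:
            # Map the start point to a cell at this resolution (clamp x or y == 1.0).
            col = min(int(x0 * s), s - 1)
            row = min(int(y0 * s), s - 1)
            # Later drags that land in the same cell overwrite earlier ones.
            m[0, row, col] = x1 - x0
            m[1, row, col] = y1 - y0
        maps.append(m)
    return maps
```

In a real model such maps would typically be concatenated with, or added to, image features of matching resolution; how PartRM injects its drag features is not described in this section.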

| Method | Setting | PartDrag-4D PSNR ↑ | PartDrag-4D SSIM ↑ | PartDrag-4D LPIPS ↓ | Objaverse-Animation-HQ PSNR ↑ | Objaverse-Animation-HQ SSIM ↑ | Objaverse-Animation-HQ LPIPS ↓ | Time (↓) |
|---|---|---|---|---|---|---|---|---|
| DiffEditor | NVS-First | 22.52 | 0.8974 | 0.1138 | 19.24 | 0.8988 | 0.0902 | 33.6s / 151.2s |
| DiffEditor | Drag-First | 22.34 | 0.9174 | 0.0918 | 19.46 | 0.9079 | 0.0842 | 11.5s / 128.8s |
| DragAPart | NVS-First | 24.27 | 0.9343 | 0.0690 | 19.38 | 0.8915 | 0.0873 | 21.4s / 139.7s |
| DragAPart | Drag-First | 24.91 | 0.9454 | 0.0567 | 19.44 | 0.9004 | 0.0885 | 8.5s / 119.4s |
| Puppet-Master | NVS-First | 24.20 | 0.9447 | 0.0579 | - | - | - | 64.9s / 187.5s |
| Puppet-Master | Drag-First | 24.42 | 0.9475 | 0.0528 | - | - | - | 245.8s / 361.5s |
| PartRM (Ours) | - | 28.15 | 0.9531 | 0.0356 | 21.38 | 0.9209 | 0.0758 | 4.2s / - |
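For reference, PSNR in the table above is the standard peak signal-to-noise ratio, 10·log10(MAX² / MSE). The sketch below assumes images scaled to [0, 1] (so MAX = 1); the exact evaluation protocol (resolution, background handling, which views are compared) is not stated here, and `psnr` is an illustrative helper, not the project's evaluation code.

```python
import numpy as np


def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two images in [0, max_val]."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    # Mean squared error over all pixels and channels.
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images have unbounded PSNR
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

A uniform error of 0.1 per pixel gives MSE = 0.01 and therefore 20 dB, so each additional 10 dB corresponds to a tenfold drop in MSE. SSIM and LPIPS need more machinery and are commonly computed with `skimage.metrics.structural_similarity` and the `lpips` package, respectively.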

@article{gao2025partrm,
  title={PartRM: Modeling Part-Level Dynamics with Large 4D Reconstruction Model},
  author={Mingju Gao and Yike Pan and Huan-ang Gao and Zongzheng Zhang and Wenyi Li and Hao Dong and Hao Tang and Li Yi and Hao Zhao},
  journal={},
  year={2025}
}